2009-08-27

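- [Plan] Hadoop cluster maintenance

- [Status] Found that hadoop104 and hadoop106 had hit a kernel panic.

- [Plan] Hadoop cluster maintenance

- [Status] Found that dfs.replication in /etc/hadoop/conf/hadoop-site.xml was set to 1, meaning no replicas were being kept.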

- [Solution]

- Change dfs.replication to 3.

- Restart hadoop-namenode and hadoop-datanode.

- Use hadoop fs -setrep to raise the replication factor of all existing files under /user, which is still 1:

      root@hadoop:~# su -s /bin/sh hadoop -c "hadoop fs -setrep -R 3 /user"

- Run hadoop balancer to check whether the replication mechanism gets triggered:

      root@hadoop:~# su -s /bin/sh hadoop -c "hadoop balancer"

- Run hadoop fsck to check whether the replication mechanism gets triggered:

      root@hadoop:~# su -s /bin/sh hadoop -c "hadoop fsck / -racks"

      ### Messages report files whose replica count is still too low
      /user/waue/input/1.txt: Under replicated blk_-682447276956362627_16045. Target Replicas is 3 but found 1 replica(s).
      ### Ever since the disk data on hadoop113 was deleted by mistake, HDFS has been in CORRUPT state; it looks like we will have to ask everyone to re-upload their data
      Status: CORRUPT
      Total size: 2514937876121 B
      Total dirs: 2800
      Total files: 14972
      Total blocks (validated): 51686 (avg. block size 48658009 B)
      ********************************
      CORRUPT FILES: 1921
      MISSING BLOCKS: 4972
      MISSING SIZE: 232737717270 B
      CORRUPT BLOCKS: 4972
      ********************************
      Minimally replicated blocks: 46714 (90.38037 %)
      Over-replicated blocks: 3 (0.00580428 %)
      Under-replicated blocks: 45388 (87.81488 %)
      Mis-replicated blocks: 0 (0.0 %)
      Default replication factor: 3
      Average block replication: 1.0597067
      Corrupt blocks: 4972
      Missing replicas: 89401 (163.2239 %)
      Number of data-nodes: 17
      Number of racks: 1
      The filesystem under path '/' is CORRUPT
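The fsck summary above is easier to track across maintenance runs once it is turned into numbers. The following is a minimal sketch, not part of the original log: the function name `parse_fsck_summary` is hypothetical, and it assumes the summary keeps the `label: value` layout shown above (only a few of the lines are copied in as sample input).

```python
import re

# Sample summary lines copied from the fsck run above (trimmed).
FSCK_SUMMARY = """\
Status: CORRUPT
Total size: 2514937876121 B
Total blocks (validated): 51686 (avg. block size 48658009 B)
MISSING BLOCKS: 4972
MISSING SIZE: 232737717270 B
Minimally replicated blocks: 46714 (90.38037 %)
Under-replicated blocks: 45388 (87.81488 %)
"""


def parse_fsck_summary(text):
    """Map each label to the first integer after the colon.

    Values that do not start with a digit (such as Status) stay strings.
    Hypothetical helper: it only understands "label: value" lines.
    """
    fields = {}
    for line in text.splitlines():
        label, sep, value = line.strip().partition(":")
        if not sep:
            continue  # not a "label: value" line
        value = value.strip()
        number = re.match(r"\d+", value)
        fields[label] = int(number.group()) if number else value
    return fields


summary = parse_fsck_summary(FSCK_SUMMARY)
missing_share = summary["MISSING BLOCKS"] / summary["Total blocks (validated)"]
print(f"status={summary['Status']} missing blocks={missing_share:.2%}")
```

In this run the missing blocks and the minimally replicated blocks add up to the total block count, so roughly one block in ten has no surviving replica, and raising dfs.replication cannot bring those blocks back.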

- After fsck has run, running balancer again shows it attempting to create the data replicas:

      root@hadoop:~# su -s /bin/sh hadoop -c "hadoop balancer"
      09/08/27 06:42:34 INFO dfs.Balancer: Need to move 12.21 GB bytes to make the cluster balanced.
      09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.10:50010 to 192.168.1.17:50010
      09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.1:50010 to 192.168.1.19:50010
      09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.11:50010 to 192.168.1.16:50010
      09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.5:50010 to 192.168.1.14:50010
      09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.9:50010 to 192.168.1.18:50010
      09/08/27 06:42:34 INFO dfs.Balancer: Will move 50 GBbytes in this iteration
      2009/8/27 上午 06:42:34 0 0 KB 12.21 GB 50 GB
      09/08/27 07:29:00 INFO dfs.Balancer: Decided to move block -5073029869990347015 with a length of 64 MB bytes from 192.168.1.2:50010 to 192.168.1.15:50010 using proxy source 192.168.1.2:50010
      09/08/27 07:29:00 INFO dfs.Balancer: Starting moving -5073029869990347015 from 192.168.1.2:50010 to 192.168.1.15:50010
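As a cross-check on the balancer output above, the per-node "Decided to move" lines can be summed and compared with the "Will move 50 GBbytes in this iteration" line. This is a rough sketch under the assumption that the log keeps exactly the wording shown above; the regular expression and the name `planned_moves` are illustrative only.

```python
import re

# Plan lines copied from the balancer run above.
BALANCER_LOG = """\
09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.10:50010 to 192.168.1.17:50010
09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.1:50010 to 192.168.1.19:50010
09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.11:50010 to 192.168.1.16:50010
09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.5:50010 to 192.168.1.14:50010
09/08/27 06:42:34 INFO dfs.Balancer: Decided to move 10 GB bytes from 192.168.1.9:50010 to 192.168.1.18:50010
09/08/27 06:42:34 INFO dfs.Balancer: Will move 50 GBbytes in this iteration
"""

# Matches node-to-node plan lines only; per-block lines start with
# "Decided to move block ..." and are skipped because no digit follows "move ".
PLAN = re.compile(r"Decided to move (\d+(?:\.\d+)?) GB bytes from (\S+) to (\S+)")


def planned_moves(log_text):
    """Return (size_in_gb, source, target) for every node-level plan line."""
    return [(float(m.group(1)), m.group(2), m.group(3))
            for m in PLAN.finditer(log_text)]


moves = planned_moves(BALANCER_LOG)
total_gb = sum(size for size, _, _ in moves)
print(f"{len(moves)} planned moves, {total_gb:g} GB in total")
```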

- [Finding] Hadoop really goes to extremes to protect the files under the HDFS directory /var/lib/hadoop/cache: they are stored with 10 replicas.

      -rw-r--r-- 10 hadoop002 supergroup 108739 2009-08-24 22:55 /var/lib/hadoop/cache/hadoop/mapred/system/job_200908242228_0009/job.jar
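The replica count is the second column of the hadoop fs -ls listing above. The value of 10 on job.jar most likely comes from the MapReduce property mapred.submit.replication, whose default is 10 for submitted job files, rather than from dfs.replication. The sketch below only shows how that column could be read out of a listing line; `parse_ls_line` is a hypothetical helper and assumes the eight-column layout shown above.

```python
# Listing line copied from the finding above.
LS_LINE = ("-rw-r--r-- 10 hadoop002 supergroup 108739 2009-08-24 22:55 "
           "/var/lib/hadoop/cache/hadoop/mapred/system/job_200908242228_0009/job.jar")


def parse_ls_line(line):
    """Split one listing line into its columns (hypothetical helper).

    Assumes: permissions, replication, owner, group, size, date, time, path.
    Directories show "-" in the replication column, mapped here to None.
    """
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    return {
        "permissions": perms,
        "replication": None if repl == "-" else int(repl),
        "owner": owner,
        "group": group,
        "size": int(size),
        "modified": f"{date} {time}",
        "path": path,
    }


entry = parse_ls_line(LS_LINE)
print(entry["path"], "replication =", entry["replication"])
```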

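- [Plan] Hadoop cluster maintenance

- [Status] Found that setting the replication factor to 3 runs the cluster out of space. In addition, there are many files but they are all small, so perhaps the block size should not be 64 MB (67108864 bytes).

- [Solution]

- Set the replication factor to 2 and the block size to 4 KB (4096 bytes).

- Because the block size only takes effect when a file is created, it currently looks as if, besides running fsck, we also need to run balancer; only blocks that have been moved have a chance of becoming smaller. The other option is hadoop fs -cp /user /user2, which is more likely to affect everything.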

      09/08/27 14:37:31 INFO dfs.Balancer: Decided to move block -2997460447318763427 with a length of 4 KB bytes from 192.168.1.18:50010 to 192.168.1.2:50010 using proxy source 192.168.1.18:50010
      09/08/27 14:37:31 INFO dfs.Balancer: Starting moving -2997460447318763427 from 192.168.1.18:50010 to 192.168.1.2:50010
      09/08/27 14:37:31 INFO dfs.Balancer: Decided to move block -2997680912701056691 with a length of 4 KB bytes from 192.168.1.18:50010 to 192.168.1.2:50010 using proxy source 192.168.1.18:50010
      09/08/27 14:37:31 INFO dfs.Balancer: Starting moving -2997680912701056691 from 192.168.1.18:50010 to 192.168.1.2:50010
      09/08/27 14:37:31 INFO dfs.Balancer: Decided to move block 248102854142724550 with a length of 13.36 KB bytes from 192.168.1.6:50010 to 192.168.1.5:50010 using proxy source 192.168.1.6:50010
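To put the replication trade-off from this maintenance entry into numbers, the raw disk space needed is roughly the logical data size times the replication factor. The sketch below uses the "Total size" figure from the earlier fsck summary as its only input; it is an estimate under that assumption and ignores the missing blocks, non-HDFS disk usage, and the cluster's real capacity, which this log does not state.

```python
# "Total size" reported by the earlier fsck run, in bytes.
TOTAL_SIZE_BYTES = 2514937876121


def raw_bytes_needed(logical_bytes, replication):
    """Approximate raw DataNode space: every byte is stored `replication` times."""
    return logical_bytes * replication


for replication in (1, 2, 3):
    needed = raw_bytes_needed(TOTAL_SIZE_BYTES, replication)
    print(f"replication={replication}: about {needed / 1e12:.2f} TB of raw disk")
```

Going from 3 replicas to 2 saves one full copy of the data set, which is the space the cluster was short of.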

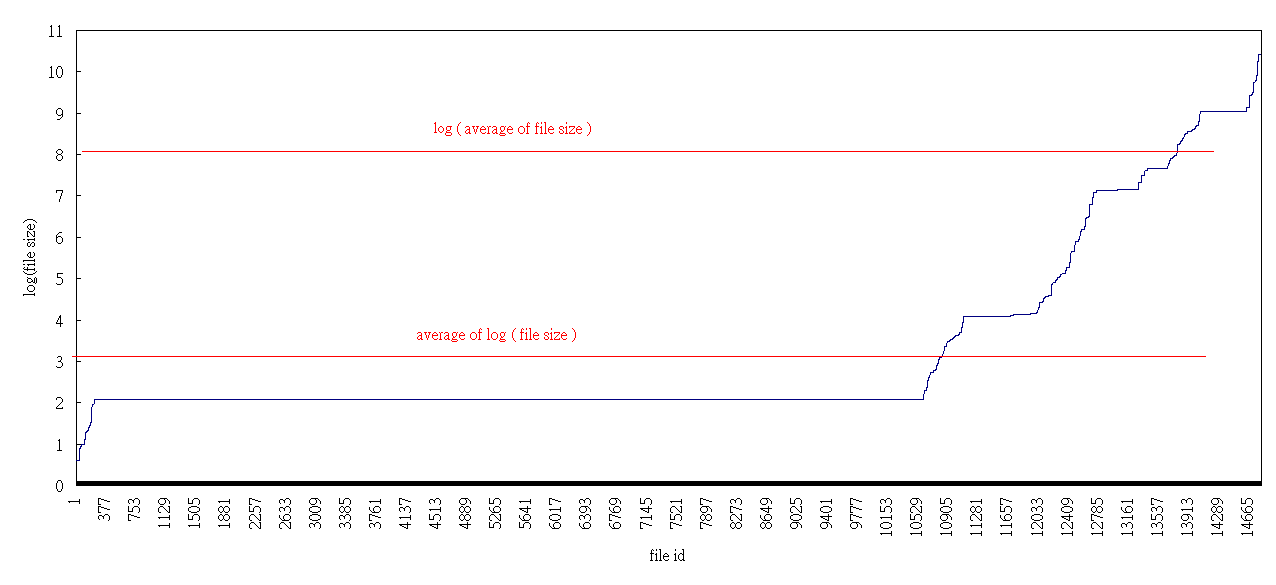

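- [Statistics] The average file size currently falls mostly below 1 KB.

- [Finding] According to a discussion on the Hadoop forum, the block size is actually decided by the user (the Hadoop client). Passing -D to set the dfs.block.size property when uploading or copying is enough to choose a custom block size. Example files:

- /user/jazz/input/hadoop-default.xml - file created before the change (dfs.block.size in /etc/hadoop/conf/hadoop-site.xml = 67108864, i.e. 64 MB)

- /user/jazz/input/hadoop-default.xml.new - file created by copying after the change (dfs.block.size in /etc/hadoop/conf/hadoop-site.xml = 4096, i.e. 4 KB)

- /user/jazz/input/hadoop-default.xml.4M - file created by copying with a custom property (-D dfs.block.size=4194304, i.e. 4 MB, overriding the 4096 in /etc/hadoop/conf/hadoop-site.xml)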

      jazz@hadoop:~$ hadoop fs -cp /user/jazz/input/hadoop-default.xml /user/jazz/input/hadoop-default.xml.new
      jazz@hadoop:~$ hadoop fs -D dfs.block.size=4194304 -cp /user/jazz/input/hadoop-default.xml /user/jazz/input/hadoop-default.xml.4M
      jazz@hadoop:~$ hadoop fs -stat "filesize=%b block_size=%o filename=%n replication=%r" /user/jazz/input/hadoop-default.xml
      filesize=40673 block_size=67108864 filename=hadoop-default.xml replication=1
      jazz@hadoop:~$ hadoop fs -stat "filesize=%b block_size=%o filename=%n replication=%r" /user/jazz/input/hadoop-default.xml.4M
      filesize=40673 block_size=4194304 filename=hadoop-default.xml.4M replication=2
      jazz@hadoop:~$ hadoop fs -stat "filesize=%b block_size=%o filename=%n replication=%r" /user/jazz/input/hadoop-default.xml.new
      filesize=40673 block_size=4096 filename=hadoop-default.xml.new replication=1
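The -stat results above can be turned into block counts to see what each block size means for the same 40673-byte file. This is a small sketch with a hypothetical helper, `block_count`; it relies on two general HDFS properties rather than anything measured in this log: a file is split into ceil(size / block size) blocks, and the last block only occupies its actual length on disk, so a small file under a 64 MB block size does not consume 64 MB.

```python
def block_count(file_size, block_size):
    """Number of HDFS blocks for a file: ceil(file_size / block_size)."""
    return (file_size + block_size - 1) // block_size


FILE_SIZE = 40673  # filesize reported by hadoop fs -stat above

# Block sizes reported by -stat for the three example files.
for name, block_size in [
    ("hadoop-default.xml", 67108864),
    ("hadoop-default.xml.4M", 4194304),
    ("hadoop-default.xml.new", 4096),
]:
    print(f"{name}: block_size={block_size} -> {block_count(FILE_SIZE, block_size)} block(s)")
```

With the 4 KB setting the same file is tracked as several blocks instead of one, which means more block entries for the NameNode to manage; that cost is worth weighing before rewriting all of /user with a very small block size.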

Last modified on Aug 28, 2009, 12:53:37 PM

Attachments (1)

- hadoop_file_size_block_size.png (20.2 KB) - added by jazz 16 years ago.