Using GlusterFS in a DRBL Environment

0.Introduction

- Definition

GlusterFS is a free software parallel distributed file system, capable of scaling to several petabytes.

GlusterFS is a powerful network/cluster filesystem. The GlusterFS package comes with two components, a server and a client. The storage server (or each server in a cluster) runs glusterfsd, and the clients use the mount command or the glusterfs client to mount the exported filesystem. Storage can keep scaling beyond petabytes as demand increases.

To mount GlusterFS file systems, the client computers need FUSE support in the kernel. Servers can run on any node without extra prerequisites. To date, the GlusterFS server has been tested on Linux, FreeBSD and OpenSolaris, while the client runs only on Linux machines. From the 1.3.9 release onwards, GlusterFS runs both client and server on Mac OS X Leopard.
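Because the client depends on FUSE, it can help to confirm kernel support before going further. The following is a minimal sketch, not part of the original procedure: the helper name `has_fuse` and its optional file argument are hypothetical, and it assumes a Linux system where `/proc/filesystems` lists the filesystems the running kernel supports.

```shell
#!/bin/sh
# has_fuse [FILE]
# Succeed if FILE (default: /proc/filesystems) lists "fuse" as a whole word.
# The FILE argument is a hypothetical hook that makes the check easy to test.
has_fuse() {
    grep -qw fuse "${1:-/proc/filesystems}" 2>/dev/null
}

if has_fuse; then
    echo "FUSE is available in the running kernel"
else
    echo "FUSE not found; the fuse module may need to be loaded or installed"
fi
```

The whole-word match avoids a false positive on related entries such as `fuseblk`.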

GlusterFS is one of the few projects that support several types of communication transport, such as TCP/IP, InfiniBand VAPI/Verbs, Sockets Direct Protocol and Unix domain sockets. See also: GlusterFS in Wikipedia.

- Features

- Very modular design: each feature is implemented as a translator (a concept borrowed from the GNU/Hurd operating system) that can be plugged in depending on the user's requirements.

- Automatic File Replication.

- Can use the Stripe translator to get more I/O performance for large files.

- No kernel patches required, so the software can be installed without any server downtime.
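To make the stripe feature above more concrete, here is a small sketch of a round-robin striping model. This is an illustrative assumption, not the actual GlusterFS implementation: the function name `stripe_target` is hypothetical, and the 1MB block size and four subvolumes simply mirror the stripe setup used later on this page.

```shell
#!/bin/sh
# stripe_target OFFSET BLOCK_SIZE N_SUBVOLS
# Print the 0-based index of the subvolume that would hold byte OFFSET
# under a simple round-robin model (an assumption for illustration only).
stripe_target() {
    echo $(( ($1 / $2) % $3 ))
}

block=$((1024 * 1024))   # 1MB blocks
for offset in 0 "$block" $((2 * block)) $((3 * block)) $((4 * block)); do
    echo "offset $offset -> subvolume $(stripe_target "$offset" "$block" 4)"
done
```

In this model consecutive 1MB blocks land on subvolumes 0, 1, 2 and 3, and the fifth block wraps back to subvolume 0, which is why a large sequential read or write can keep all four disks busy.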

1.Installation

- Installation Manual

- Install required packages

$ aptitude install autotools libtool gcc flex bison byacc linux-headers-`uname -r`

- Install the GlusterFS patched FUSE module

    $ wget http://ftp.zresearch.com/pub/gluster/glusterfs/fuse/fuse-2.7.3glfs10.tar.gz
    $ tar -zxvf fuse-2.7.3glfs10.tar.gz
    $ cd fuse-2.7.3glfs10
    $ ./configure --prefix=/usr --enable-kernel-module
    $ make install
    $ ldconfig
    $ depmod -a
    $ rmmod fuse
    $ modprobe fuse
    $ vim /etc/modules    (add the line "fuse" so the module is loaded at boot)
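The last step above edits /etc/modules by hand to add fuse. As a non-interactive alternative, the following sketch appends the line only if it is missing, so it can be run repeatedly. The helper name `ensure_module_line` and its optional file argument are hypothetical; writing to /etc/modules requires root.

```shell
#!/bin/sh
# ensure_module_line MODULE [FILE]
# Append MODULE to FILE (default: /etc/modules) unless an identical
# line is already present, so repeated runs do not add duplicates.
ensure_module_line() {
    file="${2:-/etc/modules}"
    grep -qxF "$1" "$file" 2>/dev/null || echo "$1" >> "$file"
}

# Typical use, as root:
#   ensure_module_line fuse
```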

- Install GlusterFS (GlusterFS 1.3)

    $ wget http://ftp.zresearch.com/pub/gluster/glusterfs/1.3/glusterfs-1.3.11.tar.gz
    $ tar -xzf glusterfs-1.3.11.tar.gz
    $ cd glusterfs-1.3.11
    $ ./configure --prefix=
    $ make install
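After the build, a quick sanity check is to confirm that the two programs named in the introduction, glusterfs and glusterfsd, can be found on the PATH. This is a sketch under that assumption only; the helper name `report_binary` is hypothetical, and where the binaries actually end up depends on the prefix passed to configure.

```shell
#!/bin/sh
# report_binary NAME
# Print where NAME resolves on the current PATH, or a not-found note.
report_binary() {
    if path=$(command -v "$1" 2>/dev/null); then
        echo "$1: $path"
    else
        echo "$1: not found in PATH"
    fi
}

report_binary glusterfs
report_binary glusterfsd
```

If either program is reported as missing, the install directory may simply not be on the PATH of the current shell.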

2.FS design (our case: using 4 disks for striping)

- Cloud0 (/dev/sdb1->160G, /dev/sdb2->160G)

    $ cat drbl_Cloud.vol
    ### Cloud Server ###
    volume brick1
      type storage/posix
      option directory /home/b1
    end-volume

    volume brick2
      type storage/posix
      option directory /home/b2
    end-volume

    volume server
      type protocol/server
      subvolumes brick1 brick2
      option transport-type tcp/server # For TCP/IP transport
      option auth.ip.brick1.allow 192.168.1.*
      option auth.ip.brick2.allow 192.168.1.*
    end-volume

    ### Client ###
    ##Cloud##
    volume client1
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud0
      option remote-subvolume brick1
    end-volume

    volume client2
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud0
      option remote-subvolume brick2
    end-volume

    ##Cloud1##
    volume client3
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud1
      option remote-subvolume brick
    end-volume

    ##Cloud2##
    volume client4
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud2
      option remote-subvolume brick
    end-volume

    volume stripe0
      type cluster/stripe
      option block-size *:1MB
      subvolumes client1 client2 client3 client4
    end-volume

- Cloud1 ~ Cloud2 (Cloud1:/dev/sda1 -> 160G ; Cloud2:/dev/sda1 -> 160G)

    $ cat drbl_Cloud1_2.vol
    ### Cloud Server ###
    volume brick
      type storage/posix
      option directory /home/bc
    end-volume

    volume server
      type protocol/server
      subvolumes brick
      option transport-type tcp/server # For TCP/IP transport
      option auth.ip.brick.allow 192.168.1.*
    end-volume

    ### Client ###
    ##Cloud##
    volume client1
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud0
      option remote-subvolume brick1
    end-volume

    volume client2
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud0
      option remote-subvolume brick2
    end-volume

    ##Cloud1##
    volume client3
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud1
      option remote-subvolume brick
    end-volume

    ##Cloud2##
    volume client4
      type protocol/client
      option transport-type tcp/client
      option remote-host Cloud2
      option remote-subvolume brick
    end-volume

    volume stripe0
      type cluster/stripe
      option block-size *:1MB
      subvolumes client1 client2 client3 client4
    end-volume
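The client section is the same in both volume files: four protocol/client volumes that differ only in name, remote host and remote subvolume. As a sketch of that structure, the hypothetical helper `client_volume` below prints one such stanza from three arguments. It is only an illustration of the pattern in the files above, not a tool shipped with GlusterFS.

```shell
#!/bin/sh
# client_volume NAME HOST SUBVOLUME
# Print a protocol/client stanza in the layout used by the volume files above.
client_volume() {
    cat <<EOF
volume $1
  type protocol/client
  option transport-type tcp/client
  option remote-host $2
  option remote-subvolume $3
end-volume
EOF
}

# The four clients that the stripe0 volume combines:
client_volume client1 Cloud0 brick1
client_volume client2 Cloud0 brick2
client_volume client3 Cloud1 brick
client_volume client4 Cloud2 brick
```

Generating the stanzas this way keeps the client sections of the two files identical, which matters because every node must agree on the order of subvolumes in stripe0.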

3.Test

- Startup GlusterFS

    ==== Cloud0 ====
    $ glusterfs -f drbl_Cloud.vol /home/mnt
    ==== Cloud1 ====
    $ glusterfs -f drbl_Cloud1_2.vol /home/mnt
    ==== Cloud2 ====
    $ glusterfs -f drbl_Cloud1_2.vol /home/mnt
    ==== Check GlusterFS status ====
    $ less /var/log/glusterfs/glusterfs.log
    $ ps aux | grep gluster

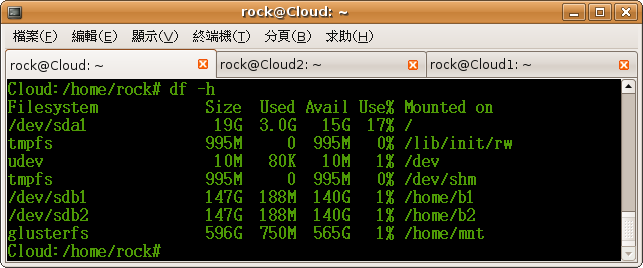

- Check FS Volume
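The page lists no commands for this step. One plausible check, offered as a sketch only, is to confirm that /home/mnt (the mount point used in the startup commands) is a FUSE mount and then look at it with df. The helper name `is_fuse_mounted` and its optional mounts-file argument are hypothetical, and the check assumes a Linux system with /proc/mounts.

```shell
#!/bin/sh
# is_fuse_mounted MOUNTPOINT [MOUNTS_FILE]
# Succeed if MOUNTS_FILE (default: /proc/mounts) has an entry whose mount
# point is MOUNTPOINT and whose filesystem type contains "fuse".
is_fuse_mounted() {
    awk -v mp="$1" '$2 == mp && $3 ~ /fuse/ { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}" 2>/dev/null
}

if is_fuse_mounted /home/mnt; then
    df -h /home/mnt
else
    echo "/home/mnt is not mounted as a FUSE filesystem"
fi
```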

4.Reference

Attachments (3)

- drbl_Cloud0.png (20.3 KB) - added by rock 17 years ago.

- drbl_Cloud.vol (1.1 KB) - added by rock 17 years ago.

- drbl_Cloud1_2.vol (1.0 KB) - added by rock 17 years ago.
