Chukwa Study

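Hadoop runs on a distributed cluster that is shared by many users and groups. At any moment many users are accessing the NN (NameNode) or JT (JobTracker), operating on the distributed file system or on MapReduce, and using the cluster's machines for their storage and computation. As the number of Hadoop users grows, it becomes hard for cluster operators to assess the cluster's current state and trends objectively. For example, the NN could run out of memory one day without anyone noticing beforehand. Data is therefore needed to determine how Hadoop is currently running.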

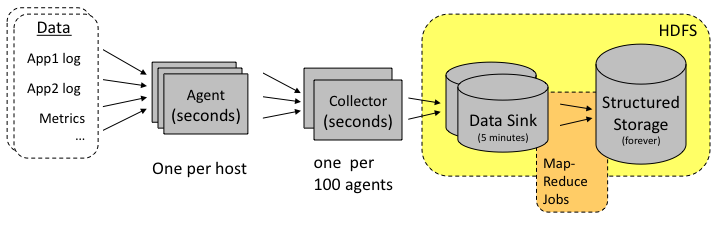

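Chukwa makes use of the logs emitted by several processes in the cluster. NN, DN, JT, TT, and similar processes all produce log output, because their code calls the log4j API to record logs. Where the logs are physically stored is set in the log4j.properties configuration file: they can be written to a local file or to a database. Chukwa takes control of this logging: the Chukwa program takes over this part of the work and handles both recording and collecting the logs. Chukwa consists of the following components: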

- Agents run on each machine and emit data. They collect the logs of each process and send them to a collector.

- Collectors receive data from the agents and write it to stable storage. They save the data sent by the agents to HDFS.

- MapReduce jobs parse and archive the data. MapReduce is used to analyze the collected data.

- HICC, the Hadoop Infrastructure Care Center, is a web-portal style interface for displaying data.

- DumpTool downloads the results and saves them to a MySQL database.

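Chukwa has to be set up and run on Linux, with a MySQL database installed. The Chukwa/conf directory contains two SQL scripts, aggregator.sql and database_create_tables.sql, to import into MySQL. A running Hadoop HDFS environment is also required.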

http://blog.csdn.net/lance_123/archive/2011/01/23/6159325.aspx

http://blog.csdn.net/vozon/archive/2010/09/03/5861518.aspx

Chukwa Configuration

Both machines need to be configured.

- Hadoop 0.20.1 is already running [Hadoop 0.21.0 does not work]

- Chukwa 0.4

- ub1 is the jobtracker / namenode

- ub2 is the Chukwa server

$ mkdir /tmp/chukwa

$ chmod 777 /tmp/chukwa

$ sudo apt-get install sysstat

$ cd /opt/hadoop

$ cp /opt/chukwa/conf/hadoop-metrics.properties.template conf/

$ cp conf/hadoop-metrics.properties conf/hadoop-metrics

$ cp /opt/chukwa/chukwa-hadoop-0.4.0-client.jar ./lib/

- Everything below is in conf/

alert

waue@nchc.org.tw

chukwa-collector-conf.xml

<property>

<name>writer.hdfs.filesystem</name>

<value>hdfs://ub2:9000/</value>

<description>HDFS to dump to</description>

</property>

<property>

<name>chukwaCollector.outputDir</name>

<value>/chukwa/logs/</value>

<description>Chukwa data sink directory</description>

</property>

<property>

<name>chukwaCollector.http.port</name>

<value>8080</value>

<description>The HTTP port number the collector will listen on</description>

</property>

jdbc.conf

Rename jdbc.conf.template to jdbc.conf

demo=jdbc:mysql://ub2:3306/test?user=root

nagios.properties

log4j.appender.NAGIOS.Host=ub2

Defaults are fine, no changes needed

- aggregator.sql

- chukwa-demux-conf.xml

- chukwa-log4j.properties

- commons-logging.properties

- database_create_tables.sql

- log4j.properties

- mdl.xml

chukwa-env.sh

export JAVA_HOME=/usr/lib/jvm/java-6-sun

export HADOOP_HOME="/opt/hadoop"

export HADOOP_CONF_DIR="/opt/hadoop/conf"

agents

agents.template ==> agents

ub1

ub2

chukwa-agent-conf.xml

chukwa-agent-conf.xml.template ==> chukwa-agent-conf.xml

<property>

<name>chukwaAgent.tags</name>

<value>cluster="wauegroup"</value>

<description>The cluster's name for this agent</description>

</property>

<property>

<name>chukwaAgent.hostname</name>

<value>localhost</value>

<description>The hostname of the agent on this node. Usually localhost, this is used by the chukwa instrumentation agent-control interface library</description>

</property>

collectors

ub1

ub2

initial_adaptors

$ cp initial_adaptors.template initial_adaptors
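Several of the steps above create a working file from its `.template` counterpart (jdbc.conf, agents, chukwa-agent-conf.xml, initial_adaptors). As a minimal sketch, the loop below does this in one pass. The function name `copy_templates` is hypothetical, and skipping files that already exist is an assumption made here so that edited files are not overwritten.

```shell
#!/bin/sh
# Hypothetical helper: create each config file from its .template copy.
# $1 is the Chukwa conf directory, e.g. /opt/chukwa/conf.
copy_templates() {
    conf_dir="$1"
    for name in jdbc.conf agents chukwa-agent-conf.xml initial_adaptors; do
        # Copy only when the template exists and the target does not,
        # so a file that was already edited is left untouched.
        if [ -f "$conf_dir/$name.template" ] && [ ! -e "$conf_dir/$name" ]; then
            cp "$conf_dir/$name.template" "$conf_dir/$name"
        fi
    done
}
```

It could be called as `copy_templates /opt/chukwa/conf`; the copied files still need the edits described in this section.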

log4j.properties

$ vim hadoop/conf/log4j.properties

log4j.appender.DRFA=org.apache.log4j.net.SocketAppender

log4j.appender.DRFA.RemoteHost=ub2

log4j.appender.DRFA.Port=9096

log4j.appender.DRFA.layout=org.apache.log4j.PatternLayout

log4j.appender.DRFA.layout.ConversionPattern=%d{ISO8601} %p %c: %m%n

Start the services

agent / collector

Run on the server only (ub2)

$ cd /opt/chukwa

$ bin/start-all.sh

HICC

Run on the server only (ub2)

$ cd /opt/chukwa

$ bin/chukwa hicc

http://localhost:8080 name / pass = admin / admin

Still to be investigated

mysql

Install mysql, php, phpmyadmin, apache2

Create database: test

Import: conf/database_create_tables.sql

The rest has not been worked out yet.

Starting Adaptors

The local agent speaks a simple text-based protocol, by default over port 9093. Suppose you want Chukwa to monitor system metrics, hadoop metrics, and hadoop logs on the localhost:

- Telnet to localhost 9093

Note: the following steps come from the documentation of an older Chukwa version and produce errors.

- Type [without quotation marks] "add org.apache.hadoop.chukwa.datacollection.adaptor.sigar.SystemMetrics SystemMetrics 60 0"

- Type [without quotation marks] "add SocketAdaptor HadoopMetrics 9095 0"

- Type [without quotation marks] "add SocketAdaptor Hadoop 9096 0"

- Type "list" -- you should see the adaptor you just started, listed as running.

- Type "close" to break the connection.

If you don't have telnet, you can get the same effect using the netcat (nc) command line tool.
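A rough sketch of the netcat route follows. The function names `agent_commands` and `send_to_agent` are hypothetical. Only the `list` and `close` commands are sent, because the `add` lines above come from older documentation and may fail. The sketch assumes an agent is listening on the default port 9093 and that `nc` is installed.

```shell
#!/bin/sh
# Hypothetical helper: print the agent control commands, one per line.
agent_commands() {
    printf '%s\n' "list" "close"
}

# Hypothetical helper: pipe the commands to the agent's control port.
# Host and port default to localhost and 9093 (the default named above).
send_to_agent() {
    agent_commands | nc "${1:-localhost}" "${2:-9093}"
}
```

Running `send_to_agent` with no arguments talks to the local agent; `send_to_agent ub1` would target the agent on ub1, provided its control port is reachable.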

Set Up Cluster Aggregation Script

Data analytics with Pig requires some additional environment setup. Pig does not use the same environment variable names as Hadoop, so make sure the following environment is set up correctly:

- export PIG_CLASSPATH=$HADOOP_CONF_DIR:$HBASE_CONF_DIR

- Set up a cron job for "pig -Dpig.additional.jars=${HBASE_HOME}/hbase-0.20.6.jar:${PIG_PATH}/pig.jar ${CHUKWA_HOME}/script/pig/ClusterSummary.pig" to run periodically
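One possible way to produce the cron entry is sketched below. The function name `pig_cron_line` is hypothetical, and the hourly schedule is an assumption, since the text only says "periodically". The variables are expanded when the line is generated, because cron runs jobs with a minimal environment in which `HBASE_HOME`, `PIG_PATH`, and `CHUKWA_HOME` are normally not set.

```shell
#!/bin/sh
# Hypothetical helper: print a crontab entry for the ClusterSummary job.
# Schedule "0 * * * *" (top of every hour) is an assumption; adjust as needed.
# HBASE_HOME, PIG_PATH and CHUKWA_HOME must be set in the calling shell.
pig_cron_line() {
    printf '0 * * * * pig -Dpig.additional.jars=%s/hbase-0.20.6.jar:%s/pig.jar %s/script/pig/ClusterSummary.pig\n' \
        "$HBASE_HOME" "$PIG_PATH" "$CHUKWA_HOME"
}
```

The line can be appended to the current user's crontab with `( crontab -l 2>/dev/null; pig_cron_line ) | crontab -`. Because of cron's minimal environment, `PATH` and `PIG_CLASSPATH` may also need to be defined inside the crontab.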