P.S.: The full notes are recorded here.

- Install Hadoop 0.18 (Cloudera packages)

$ sudo su -
# apt-get install hadoop-0.18 hadoop-0.18-namenode hadoop-conf-pseudo
# apt-get install hadoop-0.18-datanode hadoop-0.18-jobtracker hadoop-0.18-tasktracker

- Format the NameNode

# su -s /bin/bash - hadoop -c 'hadoop namenode -format'

- Usage: run Hadoop commands as the hadoop user

# su -s /bin/bash - hadoop -c "COMMAND"
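Every Hadoop command in these notes goes through the same `su` pattern, so a small shell function can save some typing. This is a minimal sketch: the name `as_hadoop` is hypothetical, and it assumes the function is defined in a root shell (for example in root's `~/.bashrc`). It joins its arguments into one string, so quoting inside COMMAND follows the usual `su -c` rules.

```bash
#!/usr/bin/env bash
# as_hadoop (hypothetical helper): run the given command line as the "hadoop"
# user through a login bash shell, i.e. the same as
#   su -s /bin/bash - hadoop -c "COMMAND"
# Must be called from a root shell; all arguments are joined into one string.
as_hadoop() {
  if [ "$#" -eq 0 ]; then
    echo "usage: as_hadoop COMMAND [ARGS...]" >&2
    return 2
  fi
  su -s /bin/bash - hadoop -c "$*"
}
```

For example, `as_hadoop hadoop namenode -format` is equivalent to the format command shown earlier.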

- Start the NameNode

# /etc/init.d/hadoop-namenode start

- Set up the Hadoop 0.20 configuration files

...

(hadoop-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml)

....
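The notes elide the actual configuration. Purely as an illustration, the sketch below writes minimal pseudo-distributed versions of the three XML files with hypothetical `write_site` and `write_pseudo_conf` helpers. Every value is an assumption: `hadoop.tmp.dir` is inferred from the `/var/lib/hadoop-0.18/cache/hadoop/...` paths in the logs further down (Hadoop 0.20 has to point at the old 0.18 storage directories for the upgrade to find them), port 8020 comes from the NameNode log, and the JobTracker address `localhost:8021` and a replication factor of 1 are guesses for a single-node setup. `hadoop-env.sh` (e.g. `JAVA_HOME`) is not covered.

```bash
#!/usr/bin/env bash
# write_site DIR FILE NAME VALUE [NAME VALUE ...]  (hypothetical helper)
# Writes DIR/FILE as a Hadoop *-site.xml file containing the given properties.
write_site() {
  local dir="$1" file="$2"
  shift 2
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$dir/$file"
}

# write_pseudo_conf DIR  (hypothetical helper)
# Writes assumed single-node values into DIR (e.g. /opt/hadoop/conf).
write_pseudo_conf() {
  local dir="$1"
  mkdir -p "$dir" || return 1
  # ${user.name} is expanded by Hadoop, not by the shell (note the single quotes)
  write_site "$dir" core-site.xml \
    fs.default.name hdfs://localhost:8020 \
    hadoop.tmp.dir '/var/lib/hadoop-0.18/cache/${user.name}'
  write_site "$dir" hdfs-site.xml \
    dfs.replication 1
  write_site "$dir" mapred-site.xml \
    mapred.job.tracker localhost:8021
}
```

For example, `write_pseudo_conf /opt/hadoop/conf` would overwrite the three files there, so back up any existing copies first.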

- Upgrade Cloudera Hadoop 0.18 to the official Apache Hadoop 0.20

# su -s /bin/bash - hadoop -c "/opt/hadoop/bin/hadoop namenode -upgrade"

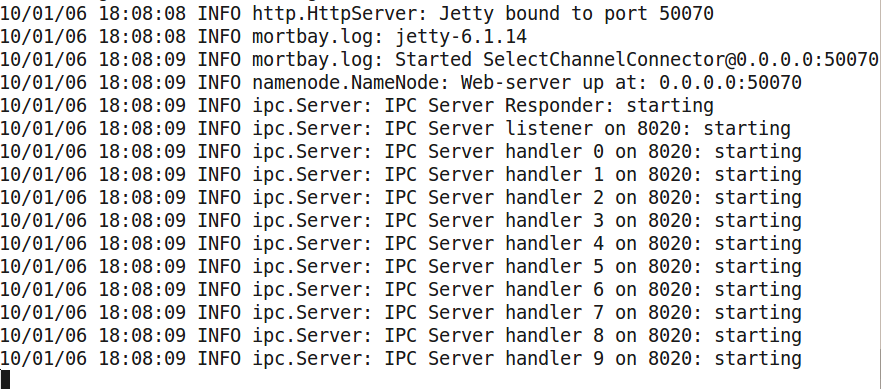

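- Screenshot of the successful upgrade:

- Note that the namenode process keeps running in the foreground and holds this terminal, so run the remaining commands from a second console.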

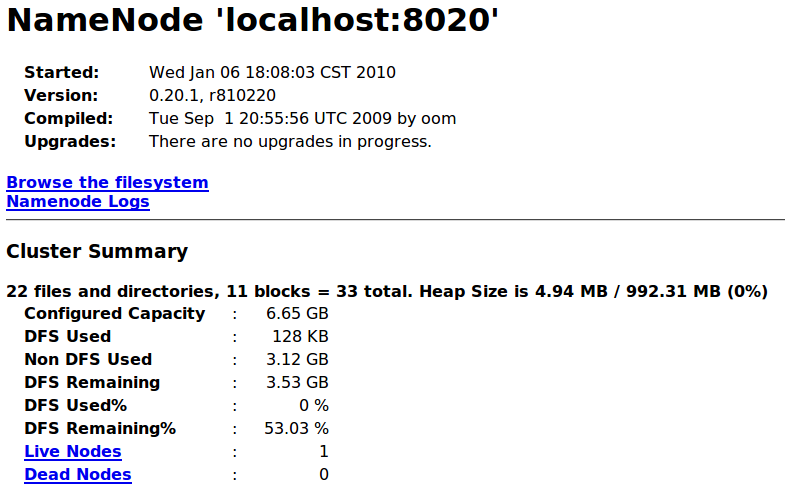
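If you would rather keep the terminal, one option is to start the upgrade in the background with `nohup` and follow its output in a log file. A minimal sketch, assuming it runs from a root shell; the helper name `start_upgrade_bg` and the default log path `/tmp/namenode-upgrade.log` are hypothetical.

```bash
#!/usr/bin/env bash
# start_upgrade_bg [LOGFILE]  (hypothetical helper, run as root)
# Starts "hadoop namenode -upgrade" as the hadoop user in the background,
# sending stdout and stderr to LOGFILE instead of holding the terminal.
start_upgrade_bg() {
  local log="${1:-/tmp/namenode-upgrade.log}"
  # The path is embedded in single quotes below, so reject paths containing one
  case "$log" in
    *"'"*) echo "log path must not contain a single quote" >&2; return 2 ;;
  esac
  su -s /bin/bash - hadoop -c \
    "nohup /opt/hadoop/bin/hadoop namenode -upgrade > '$log' 2>&1 < /dev/null &"
}
```

After `start_upgrade_bg`, `tail -f /tmp/namenode-upgrade.log` shows the output that would otherwise appear in the terminal; the log path must be writable by the hadoop user.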

- At this point the NameNode is in safe mode, waiting for the DataNodes to connect.

- Start the rest of HDFS

When the DataNodes start, they connect to the NameNode and the upgrade is initiated.

# su -s /bin/bash - hadoop -c "/opt/hadoop/bin/hadoop-daemon.sh start datanode"

Switch back to the console held by the namenode process; it shows upgrade information like the following:

10/01/06 18:08:09 INFO ipc.Server: IPC Server handler 9 on 8020: starting
10/01/07 17:52:26 INFO hdfs.StateChange: BLOCK* NameSystem.registerDatanode: node registration from 127.0.0.1:50010 storage DS-1591516806-127.0.1.1-50010-1262769597737
10/01/07 17:52:26 INFO net.NetworkTopology: Adding a new node: /default-rack/127.0.0.1:50010
10/01/07 17:52:27 INFO hdfs.StateChange: STATE* Safe mode ON. The ratio of reported blocks 0.1000 has not reached the threshold 0.9990. Safe mode will be turned off automatically.
10/01/07 17:52:27 INFO hdfs.StateChange: STATE* Safe mode extension entered. The ratio of reported blocks 1.0000 has reached the threshold 0.9990. Safe mode will be turned off automatically in 29 seconds.
10/01/07 17:52:47 INFO hdfs.StateChange: STATE* Safe mode ON. The ratio of reported blocks 1.0000 has reached the threshold 0.9990. Safe mode will be turned off automatically in 9 seconds.
10/01/07 17:52:57 INFO namenode.FSNamesystem: Total number of blocks = 10
10/01/07 17:52:57 INFO namenode.FSNamesystem: Number of invalid blocks = 0
10/01/07 17:52:57 INFO namenode.FSNamesystem: Number of under-replicated blocks = 0
10/01/07 17:52:57 INFO namenode.FSNamesystem: Number of over-replicated blocks = 0
10/01/07 17:52:57 INFO hdfs.StateChange: STATE* Leaving safe mode after 85493 secs.
10/01/07 17:52:57 INFO hdfs.StateChange: STATE* Safe mode is OFF.
10/01/07 17:52:57 INFO hdfs.StateChange: STATE* Network topology has 1 racks and 1 datanodes
10/01/07 17:52:57 INFO hdfs.StateChange: STATE* UnderReplicatedBlocks has 0 blocks

Once all the DataNodes have upgraded, you should see the message "Safe mode will be turned off automatically in X seconds".
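`hadoop dfsadmin -safemode get` prints `Safe mode is ON` or `Safe mode is OFF`, and `hadoop dfsadmin -safemode wait` blocks until safe mode ends. The sketch below instead polls `-safemode get` so that it can give up after a timeout. It assumes it runs in a shell opened as the hadoop user (`su -s /bin/bash - hadoop`); the function name `wait_safemode_off` and the `HADOOP` override variable are hypothetical.

```bash
#!/usr/bin/env bash
# HADOOP: path to the 0.20 launcher (can be overridden, e.g. for testing)
HADOOP="${HADOOP:-/opt/hadoop/bin/hadoop}"

# wait_safemode_off [TIMEOUT_SECS] [INTERVAL_SECS]  (hypothetical helper)
# Returns 0 once "dfsadmin -safemode get" reports OFF, 1 on timeout,
# 2 if the polling interval is not a positive number.
wait_safemode_off() {
  local timeout="${1:-300}" interval="${2:-5}" waited=0
  [ "$interval" -gt 0 ] 2>/dev/null || return 2
  while [ "$waited" -lt "$timeout" ]; do
    if "$HADOOP" dfsadmin -safemode get 2>/dev/null | grep -q 'Safe mode is OFF'; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 1
}
```

For example, after starting the DataNode: `wait_safemode_off 300 5 && echo "HDFS is out of safe mode"`.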

- Start MapReduce

After you have verified correct operation of HDFS, you are ready to start MapReduce.

# su -s /bin/bash - hadoop -c "/opt/hadoop/bin/start-mapred.sh"

The NameNode console then shows messages like the following:

10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=listStatus src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system dst=null perm=null
10/01/07 18:03:12 INFO namenode.FSNamesystem: Number of transactions: 1 Total time for transactions(ms): 0Number of transactions batched in Syncs: 0 Number of syncs: 0 SyncTimes(ms): 0
10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=delete src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system dst=null perm=null
10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=mkdirs src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system dst=null perm=hadoop:supergroup:rwxr-xr-x
10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=setPermission src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system dst=null perm=hadoop:supergroup:rwx-wx-wx
10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=create src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system/jobtracker.info dst=null perm=hadoop:supergroup:rw-r--r--
10/01/07 18:03:12 INFO FSNamesystem.audit: ugi=hadoop,hadoop ip=/127.0.0.1 cmd=setPermission src=/var/lib/hadoop-0.18/cache/hadoop/mapred/system/jobtracker.info dst=null perm=hadoop:supergroup:rw-------
10/01/07 18:03:12 INFO hdfs.StateChange: BLOCK* NameSystem.allocateBlock: /var/lib/hadoop-0.18/cache/hadoop/mapred/system/jobtracker.info. blk_-884931960867849873_1011
10/01/07 18:03:12 INFO hdfs.StateChange: BLOCK* NameSystem.addStoredBlock: blockMap updated: 127.0.0.1:50010 is added to blk_-884931960867849873_1011 size 4
10/01/07 18:03:12 INFO hdfs.StateChange: DIR* NameSystem.completeFile: file /var/lib/hadoop-0.18/cache/hadoop/mapred/system/jobtracker.info is closed by DFSClient_-927783387
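Besides watching the logs, a quick way to confirm that all four daemons are up is the JDK's `jps` tool, which lists running JVMs as `PID MainClass`. A minimal sketch: the hypothetical `missing_daemons` function reads `jps` output on standard input and prints every expected daemon it does not find. It assumes `jps` is run as the hadoop user, which owns the daemons, and that no SecondaryNameNode is expected, since these notes never start one.

```bash
#!/usr/bin/env bash
# missing_daemons  (hypothetical helper)
# Reads `jps` output ("PID MainClass" per line) on stdin, prints each expected
# Hadoop daemon class that is absent, and returns 1 if any are missing.
missing_daemons() {
  local running d status=0
  running="$(awk '{print $2}')"   # keep only the main-class column
  for d in NameNode DataNode JobTracker TaskTracker; do
    # -x requires a whole-line match, so SecondaryNameNode does not count as NameNode
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "$d"
      status=1
    fi
  done
  return "$status"
}
```

Example: `su -s /bin/bash - hadoop -c jps | missing_daemons && echo "all daemons up"`. `jps` ships in the JDK's `bin` directory, so it may need its full path if it is not on the hadoop user's `PATH`.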

- Finalize the HDFS upgrade

Now that you've confirmed your cluster is successfully running on Hadoop 0.20, you can go ahead and finalize the HDFS upgrade:

# su -s /bin/bash - hadoop -c "/opt/hadoop/bin/hadoop dfsadmin -finalizeUpgrade"

The NameNode console then shows the following messages:

10/01/07 18:10:08 INFO common.Storage: Finalizing upgrade for storage directory /var/lib/hadoop-0.18/cache/hadoop/dfs/name. cur LV = -18; cur CTime = 1262772483322
10/01/07 18:10:08 INFO common.Storage: Finalize upgrade for /var/lib/hadoop-0.18/cache/hadoop/dfs/name is complete.
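During the upgrade, each storage directory keeps the pre-upgrade state in a `previous` subdirectory next to `current`, and finalizing deletes `previous`. The sketch below checks for that and reads `layoutVersion` from `current/VERSION` (the log above reports `cur LV = -18`). The helper names are hypothetical; the NameNode directory comes from the log, while a DataNode directory of `/var/lib/hadoop-0.18/cache/hadoop/dfs/data` would be an assumption based on the same layout.

```bash
#!/usr/bin/env bash
# layout_version DIR  (hypothetical helper)
# Prints the layoutVersion recorded in DIR/current/VERSION.
layout_version() {
  sed -n 's/^layoutVersion=//p' "$1/current/VERSION"
}

# upgrade_finalized DIR  (hypothetical helper)
# Succeeds when DIR has a current/ directory and no previous/ directory left.
upgrade_finalized() {
  [ -d "$1/current" ] && [ ! -d "$1/previous" ]
}
```

For example, `upgrade_finalized /var/lib/hadoop-0.18/cache/hadoop/dfs/name && layout_version /var/lib/hadoop-0.18/cache/hadoop/dfs/name` should print `-18` once finalization is done, if the log above is representative.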

- done

Attachments (3)

- 2010-01-06-181048_959x723_scrot.png (101.5 KB) - added by waue.

- 2010-01-06-181350_881x389_scrot.png (127.4 KB) - added by waue.

- 2010-01-07-181304_785x500_scrot.png (66.9 KB) - added by waue.
