Hadoop Program Development (Eclipse Plugin) (Advanced)

Compiling the Programs Individually

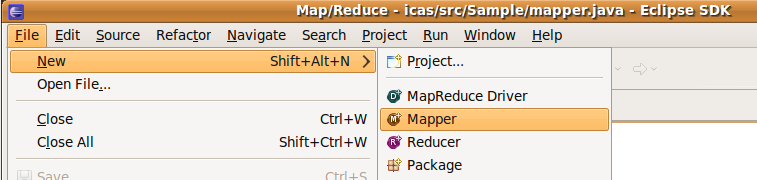

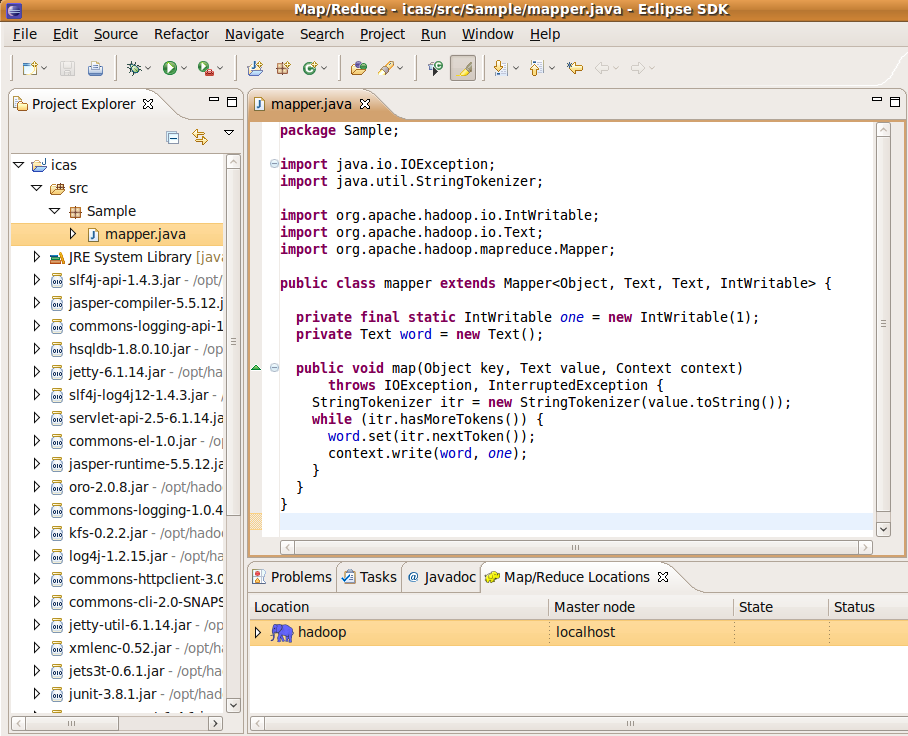

1 mapper.java

- new

File -> new -> mapper

- create

source folder -> enter: icas/src

Package : Sample

Name -> : mapper

- modify

```java
package Sample;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.MapReduceBase;
import org.apache.hadoop.mapred.Mapper;
import org.apache.hadoop.mapred.OutputCollector;
import org.apache.hadoop.mapred.Reporter;

public class mapper extends MapReduceBase implements
		Mapper<LongWritable, Text, Text, IntWritable> {
	private final static IntWritable one = new IntWritable(1);
	private Text word = new Text();

	public void map(LongWritable key, Text value,
			OutputCollector<Text, IntWritable> output, Reporter reporter)
			throws IOException {
		String line = value.toString();
		StringTokenizer tokenizer = new StringTokenizer(line);
		while (tokenizer.hasMoreTokens()) {
			word.set(tokenizer.nextToken());
			output.collect(word, one);
		}
	}
}
```
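The tokenizing step of `map` can be tried without a Hadoop cluster. The following is a minimal plain-Java sketch, not part of the lab code: the class name `MapSketch` and the method `emit` are hypothetical, and a `List` of pairs stands in for Hadoop's `OutputCollector`.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

// Hypothetical stand-in for the mapper; uses no Hadoop classes.
final class MapSketch {
    // Splits a line on whitespace and emits one (word, 1) pair per token,
    // mirroring output.collect(word, one) in the mapper.
    static List<Map.Entry<String, Integer>> emit(String line) {
        List<Map.Entry<String, Integer>> out =
                new ArrayList<Map.Entry<String, Integer>>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            out.add(new SimpleEntry<String, Integer>(tokenizer.nextToken(), 1));
        }
        return out;
    }

    public static void main(String[] args) {
        // Print each emitted pair as "word<TAB>1".
        for (Map.Entry<String, Integer> pair : emit("hello hadoop hello")) {
            System.out.println(pair.getKey() + "\t" + pair.getValue());
        }
    }
}
```

Note that repeated words are emitted once per occurrence; adding them up is left to the reducer.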

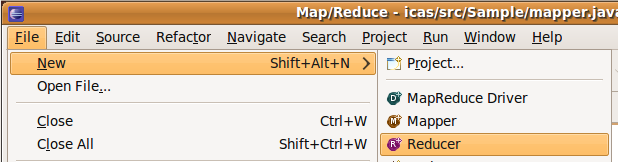

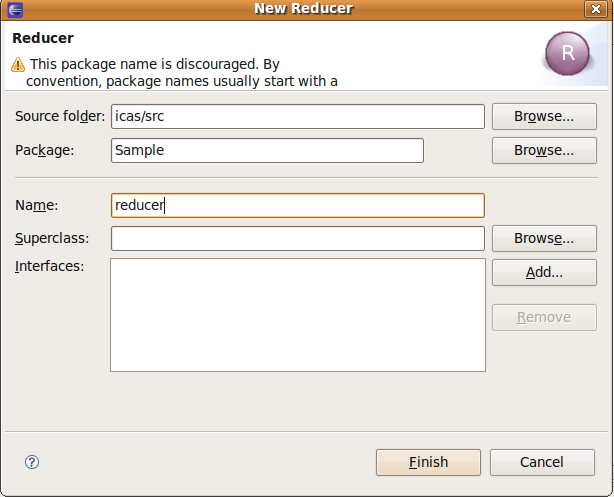

2 reducer.java

- new

- File -> new -> reducer

- create

source folder -> enter: icas/src

Package : Sample

Name -> : reducer

- modify

```java
package Sample;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.MapReduceBase;
import org.apache.hadoop.mapred.OutputCollector;
import org.apache.hadoop.mapred.Reducer;
import org.apache.hadoop.mapred.Reporter;

public class reducer extends MapReduceBase implements
		Reducer<Text, IntWritable, Text, IntWritable> {
	public void reduce(Text key, Iterator<IntWritable> values,
			OutputCollector<Text, IntWritable> output, Reporter reporter)
			throws IOException {
		int sum = 0;
		while (values.hasNext()) {
			sum += values.next().get();
		}
		output.collect(key, new IntWritable(sum));
	}
}
```
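The summing loop in `reduce` can likewise be checked in isolation. This is a small plain-Java sketch under the assumption that `Integer` values stand in for `IntWritable`; the names `ReduceSketch` and `sum` are hypothetical and do not appear in the lab code.

```java
import java.util.Arrays;
import java.util.Iterator;

// Hypothetical stand-in for the reducer; uses no Hadoop classes.
final class ReduceSketch {
    // Adds up every value that arrives for a single key,
    // as the while loop in reduce() does.
    static int sum(Iterator<Integer> values) {
        int sum = 0;
        while (values.hasNext()) {
            sum += values.next();
        }
        return sum;
    }

    public static void main(String[] args) {
        // Three occurrences of one word, each emitted by the mapper as 1.
        System.out.println(sum(Arrays.asList(1, 1, 1).iterator()));
    }
}
```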

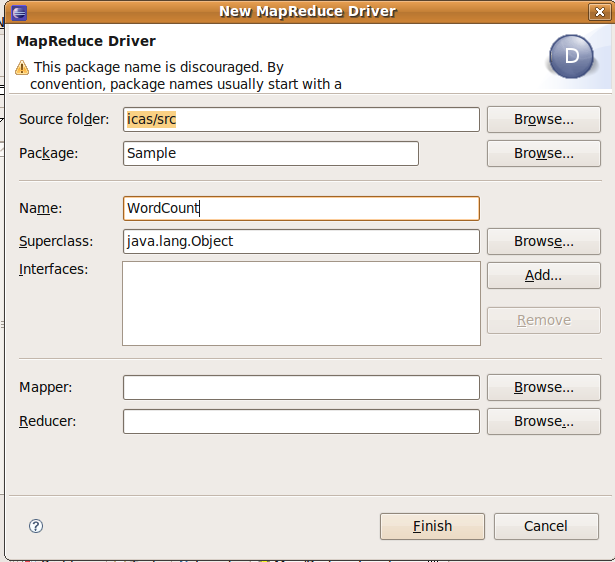

3 WordCount.java (main function)

- new

File -> new -> Map/Reduce Driver

Create WordCount.java. This file drives the mapper and the reducer, so choose Map/Reduce Driver.

- create

source folder -> enter: icas/src

Package : Sample

Name -> : WordCount.java

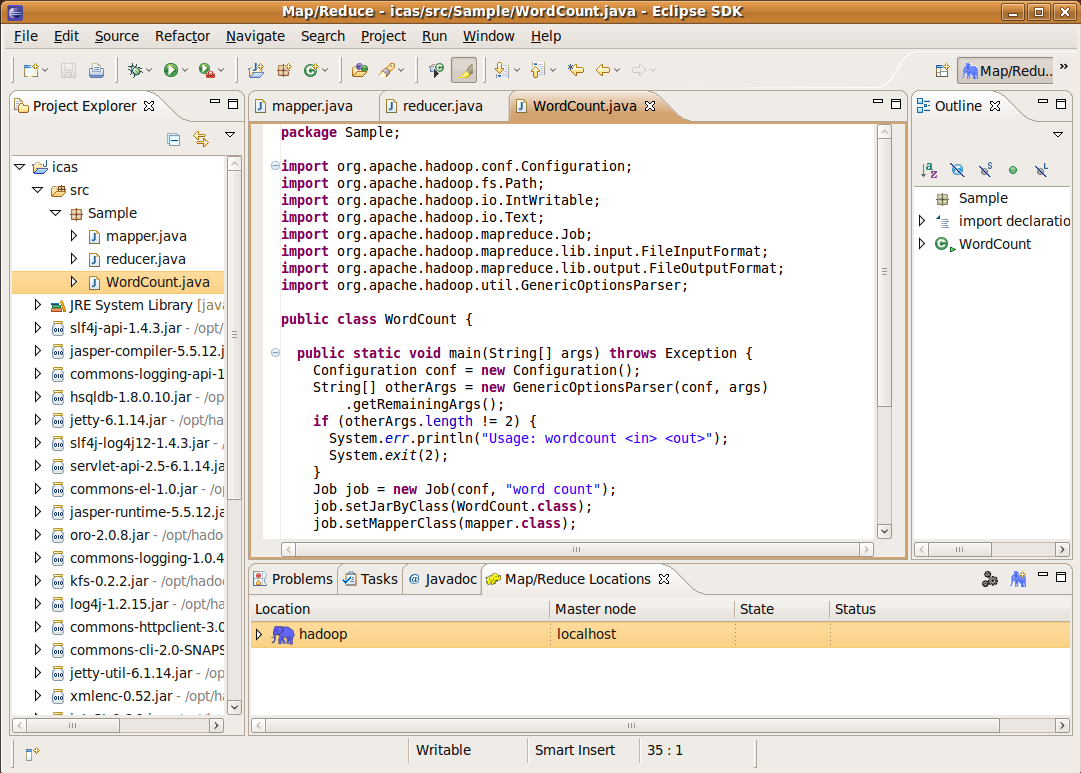

- modify

```java
package Sample;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.FileInputFormat;
import org.apache.hadoop.mapred.FileOutputFormat;
import org.apache.hadoop.mapred.JobClient;
import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.mapred.TextInputFormat;
import org.apache.hadoop.mapred.TextOutputFormat;

public class WordCount {
	public static void main(String[] args) throws Exception {
		JobConf conf = new JobConf(WordCount.class);
		conf.setJobName("wordcount");

		conf.setOutputKeyClass(Text.class);
		conf.setOutputValueClass(IntWritable.class);

		conf.setMapperClass(mapper.class);
		conf.setCombinerClass(reducer.class);
		conf.setReducerClass(reducer.class);

		conf.setInputFormat(TextInputFormat.class);
		conf.setOutputFormat(TextOutputFormat.class);

		FileInputFormat.setInputPaths(conf, new Path("/user/hadooper/input"));
		FileOutputFormat.setOutputPath(conf, new Path("lab5_out2"));

		JobClient.runJob(conf);
	}
}
```
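The driver registers `reducer` as the combiner as well as the reducer. That is safe for word count because addition is associative and commutative: summing per-split partial sums gives the same totals as summing every `1` directly. The plain-Java sketch below illustrates this with two made-up input splits; `CombinerSketch`, `countSplit`, and `reduce` are hypothetical names, and maps stand in for Hadoop's key/value streams.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical illustration of why the reducer can double as a combiner.
final class CombinerSketch {
    // Map plus local combine: word counts for one input split.
    static Map<String, Integer> countSplit(String split) {
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        StringTokenizer tokenizer = new StringTokenizer(split);
        while (tokenizer.hasMoreTokens()) {
            counts.merge(tokenizer.nextToken(), 1, Integer::sum);
        }
        return counts;
    }

    // Final reduce: adds the partial sums produced for each split.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new TreeMap<String, Integer>();
        for (Map<String, Integer> partial : partials) {
            for (Map.Entry<String, Integer> e : partial.entrySet()) {
                total.merge(e.getKey(), e.getValue(), Integer::sum);
            }
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, Integer> combined =
                reduce(Arrays.asList(countSplit("a b a"), countSplit("b a")));
        Map<String, Integer> direct = countSplit("a b a b a");
        // Both routes yield the same counts.
        System.out.println(combined.equals(direct));
    }
}
```

An operation that is not associative, such as computing an average, could not reuse its reducer as a combiner in this way.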

- After all three files are saved, both src and bin under the icas project contain generated files. Check them from the command line:

```
$ cd workspace/icas
$ ls src/Sample/
mapper.java  reducer.java  WordCount.java
$ ls bin/Sample/
mapper.class  reducer.class  WordCount.class
```

Eclipse can generate the JAR file

File -> Export -> java -> JAR file

-> next ->

select the project to export ->

jarfile: /home/hadooper/mytest.jar ->

next ->

next ->

main class: select the class that contains main ->

Finish

- The steps above produce your mytest.jar in /home/hadooper/
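To confirm which main class the export wizard recorded, the JAR manifest can be read back with the JDK's `java.util.jar` classes. This is a minimal sketch, not part of the lab; the class name `JarMainClassSketch` and the method `mainClass` are hypothetical, and the JAR path is supplied by the caller.

```java
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

// Hypothetical helper for inspecting an exported JAR.
final class JarMainClassSketch {
    // Returns the Main-Class manifest attribute, or null when the JAR
    // has no manifest or no Main-Class entry.
    static String mainClass(String jarPath) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            Manifest manifest = jar.getManifest();
            if (manifest == null) {
                return null;
            }
            return manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java JarMainClassSketch <path-to-jar>");
            return;
        }
        System.out.println(mainClass(args[0]));
    }
}
```

For example, it could be run with `/home/hadooper/mytest.jar` as the argument after the export finishes.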

Building Faster with a Makefile

- Programs change often, and repeating these steps every time is tedious. Let's see how the command line can be more convenient than the graphical interface.

1 Create the Makefile

```
$ cd /home/hadooper/workspace/icas/
$ gedit Makefile
```

- Enter the following Makefile content (note: each command line under a "target:" line must begin with a tab, not spaces)

```
JarFile="sample-0.1.jar"
MainFunc="Sample.WordCount"
LocalOutDir="/tmp/output"
HADOOP_BIN="/opt/hadoop/bin"

all:jar run output clean

jar:
	jar -cvf ${JarFile} -C bin/ .

run:
	${HADOOP_BIN}/hadoop jar ${JarFile} ${MainFunc} input output

clean:
	${HADOOP_BIN}/hadoop fs -rmr output

output:
	rm -rf ${LocalOutDir}
	${HADOOP_BIN}/hadoop fs -get output ${LocalOutDir}
	gedit ${LocalOutDir}/part-r-00000 &

help:
	@echo "Usage:"
	@echo " make jar - Build Jar File."
	@echo " make clean - Clean up Output directory on HDFS."
	@echo " make run - Run your MapReduce code on Hadoop."
	@echo " make output - Download and show output file"
	@echo " make help - Show Makefile options."
	@echo " "
	@echo "Example:"
	@echo " make jar; make run; make output; make clean"
```

- Or simply download the Makefile

```
$ cd /home/hadooper/workspace/icas/
$ wget http://trac.nchc.org.tw/cloud/raw-attachment/wiki/Hadoop_Lab5/Makefile
```
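The `run` target passes `input output` after the main class name, while the `WordCount` driver shown earlier ignores `args` and uses fixed paths, so that driver writes to `lab5_out2` rather than to the `output` directory that `make output` and `make clean` operate on. One way to reconcile the two, sketched here on the assumption that you are free to edit the driver, is to read the paths from `args` and fall back to the hard-coded values. The class `PathArgsSketch` and the helper `argOrDefault` are hypothetical names, not part of the lab code.

```java
// Hypothetical helper for choosing job paths from command-line arguments.
final class PathArgsSketch {
    // Returns args[index] when it is present and non-empty,
    // otherwise the supplied fallback.
    static String argOrDefault(String[] args, int index, String fallback) {
        if (args != null && index < args.length && !args[index].isEmpty()) {
            return args[index];
        }
        return fallback;
    }

    public static void main(String[] args) {
        // Defaults are the paths hard-coded in the WordCount driver.
        String in = argOrDefault(args, 0, "/user/hadooper/input");
        String out = argOrDefault(args, 1, "lab5_out2");
        System.out.println(in + " -> " + out);
    }
}
```

In the driver, the two resulting strings would be wrapped in `new Path(...)` and passed to `FileInputFormat.setInputPaths` and `FileOutputFormat.setOutputPath`.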

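2 Run

- To use the Makefile, change to that directory and run make [target]. If you do not know the available targets, run make help. With the Makefile as listed above, plain make runs the default target all, which runs jar, run, output, and clean in sequence.

- Usage of make

```
$ cd /home/hadooper/workspace/icas/
$ make help
Usage:
 make jar - Build Jar File.
 make clean - Clean up Output directory on HDFS.
 make run - Run your MapReduce code on Hadoop.
 make output - Download and show output file
 make help - Show Makefile options.
 
Example:
 make jar; make run; make output; make clean
```

- The make targets are described below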

make jar

- 1. Build the JAR file

$ make jar

make run

- 2. Run our wordcount on Hadoop

$ make run

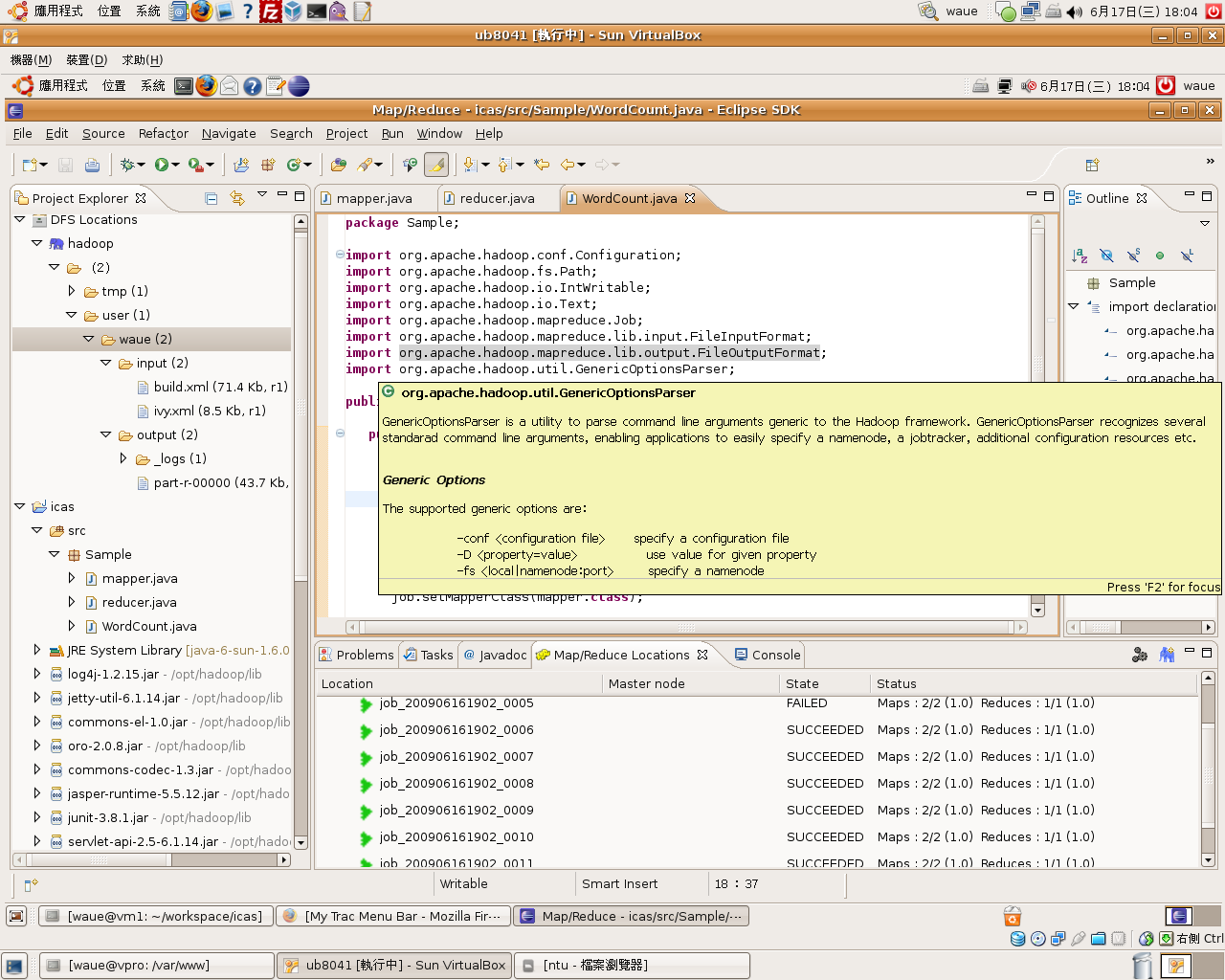

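- make run generally runs to completion without errors, which shows that the program compiled in Eclipse runs correctly on the Hadoop 0.18.3 platform.

- Back in the Eclipse window, the finished job appears in the lower pane, and the left pane now shows an output folder; part-r-00000 is our result file.

- Because the javadoc is fully configured, detailed explanations and assistance are available.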

make output

- 3. This command downloads the result file from HDFS to the local machine and opens it with gedit.

$ make output

make clean

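- 4. This command removes the output folder on HDFS. If you want to run make run again, run make clean first; otherwise Hadoop will report that the output folder already exists and refuse to run the job.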

$ make clean

Exercise: Import a Project

- Import nchc-sample into Eclipse and develop it there.

Last modified on Sep 15, 2009, 4:45:41 PM