| Version 6 (modified by jazz, 16 years ago) |

|---|

2009-09-02

- hadoop.nchc.org.tw system maintenance

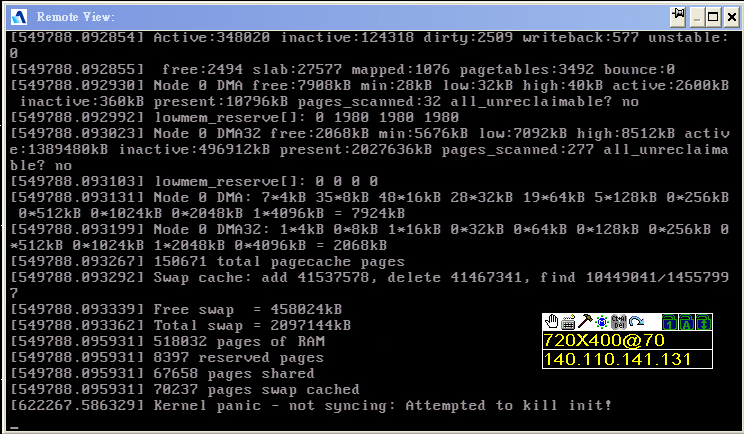

- Kernel panics have still been occurring frequently of late; the suspected main cause is insufficient memory.

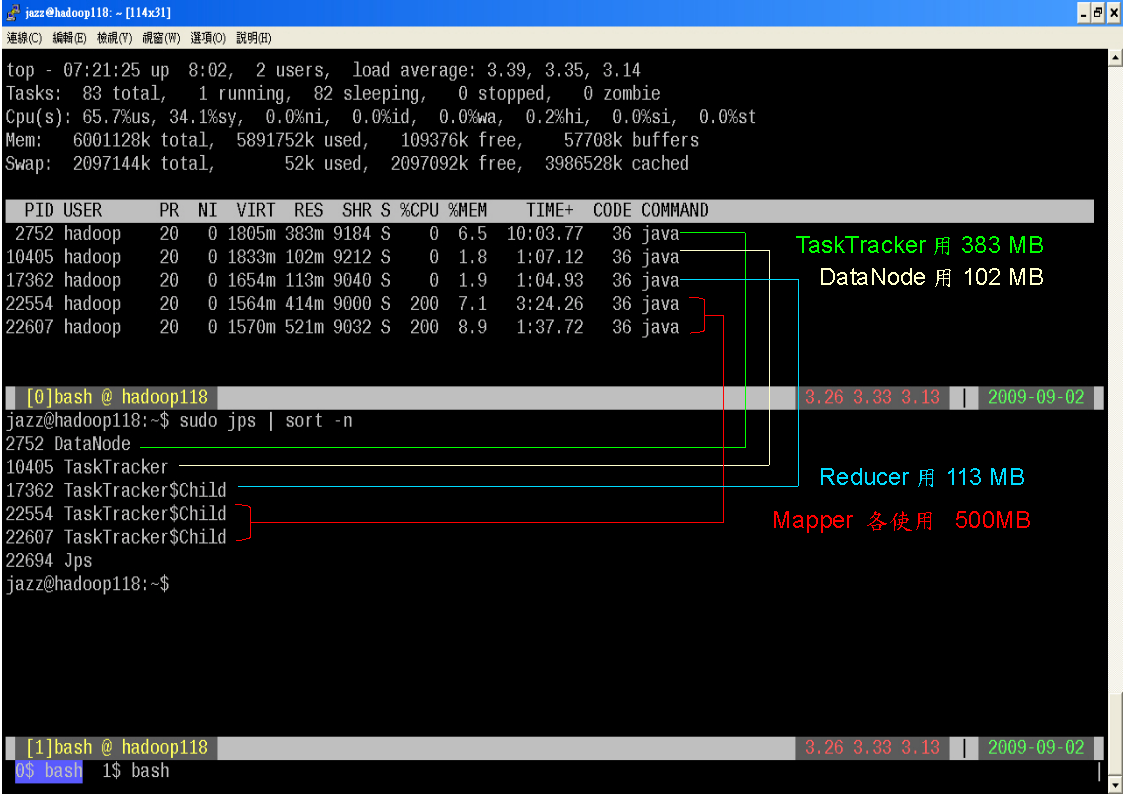

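- [Follow-up 1] HADOOP_HEAPSIZE had been raised to 1500MB and has now been lowered to 1024MB. Physical memory is only 2GB, and the system itself already consumes 1.5GB. Running an additional Map/Reduce program on top of that with a 1.5GB heap brings total demand to 3GB. Even with 2GB of swap enabled, that probably would not help.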

export HADOOP_HEAPSIZE=1024
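
The arithmetic behind this change can be sketched as below. This is only a rough budget using the figures quoted in the note (2GB physical memory, about 1.5GB baseline usage); the function names are hypothetical, and counting a single heap on top of the baseline is a simplification of how memory is really used on a node.

```python
# Rough memory budget using the figures quoted in the note above.
PHYSICAL_MB = 2048   # 2GB of physical RAM (from the note)
SYSTEM_MB = 1536     # about 1.5GB already consumed by the system (from the note)


def total_demand_mb(heap_mb):
    """Baseline usage plus one heap of the given size, in MB."""
    return SYSTEM_MB + heap_mb


def overcommit_mb(heap_mb):
    """MB by which demand exceeds physical RAM (0 if it fits)."""
    return max(0, total_demand_mb(heap_mb) - PHYSICAL_MB)


if __name__ == "__main__":
    # Compare the old setting (1500) with the new one (1024).
    for heap in (1500, 1024):
        print(heap, total_demand_mb(heap), overcommit_mb(heap))
```

Under these assumptions, the 1024MB setting still puts demand at about 2.5GB, so the node would continue to lean on swap, only less heavily than with 1500MB.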

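- [Follow-up 2] The processes that actually consume memory are the datanode, tasktracker, mappers, and reducers, so the number of mappers and reducers is also limited by the amount of physical memory available.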
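
One way to reason about that limit is to subtract the operating system's share and the daemon heaps from physical memory and divide what remains by the heap of one task. The sketch below is a hypothetical estimate only: the function name, the 512MB reserved for the OS, the 256MB daemon heaps, and the 200MB per-task heap are illustrative assumptions, not values measured on this cluster.

```python
def max_task_slots(physical_mb, os_mb, daemon_heap_mb, n_daemons, task_heap_mb):
    """Estimate how many mapper/reducer JVMs fit in RAM without swapping.

    All arguments are in MB except n_daemons, the number of long-running
    daemons on the node (e.g. datanode and tasktracker).
    """
    free_mb = physical_mb - os_mb - n_daemons * daemon_heap_mb
    if free_mb <= 0:
        return 0  # the daemons alone already exhaust physical memory
    return free_mb // task_heap_mb


if __name__ == "__main__":
    # Hypothetical node: 2GB RAM, 512MB for the OS, two 256MB daemons,
    # 200MB heap per task.
    print(max_task_slots(2048, 512, 256, 2, 200))
```

The result is the combined number of map and reduce tasks that could run at once on one node, so the per-node map and reduce slot limits together should not exceed it.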

- Information security training

jQuery

JSON Database

Web Service

- Ten Things You Didn't Know Apache (2.2) Could Do - So many new modules I have never used!! By the look of it, Apache 2.2 has surely gotten even more bloated :(

File System

- Metadata Performance of Four Linux File Systems

- Introduces fdtree, a performance benchmarking tool for file systems

Attachments (4)

- hadoop_kernel_panic.png (224.6 KB) - added by jazz 16 years ago.

- mem_usage_of_hadoop_cluster.png (23.9 KB) - added by jazz 16 years ago.

- hadoop_task_mem_usage.png (212.7 KB) - added by jazz 16 years ago.

- hadoop_mem_usages.xls (16.0 KB) - added by jazz 16 years ago.
